I gave a recap of Cisco Live Melbourne in another post and had intended to include a detailed look at each of the sessions I attended, but it became a bit long-winded, so I’ve broken the sessions out into separate posts, one for each day.

Day 1:

TECCOM-2001 – Cisco Unified Computing System

As someone who is working towards CCNA and CCNP in Cisco Data Center, this extra technical seminar really was invaluable and opened my eyes to a lot of areas that were unknown to me. This breakout session was an 8-hour, full-on overview of Cisco UCS, the components that comprise the solution and how it all works together. It wasn’t a deep-dive session, however, so if you already have a really good working knowledge of UCS and know what’s under the covers then this session wouldn’t really be for you. That said, I think there are always opportunities to learn something new.

The session was broken down into 6 parts.

- UCS Overview

- Networking

- Storage Best Practices

- UCS Operational Best Practices

- UCS does Security Admin

- UCS Performance Manager

Some of the main takeaways from the session were around the recent Gen 3 releases of the UCS hardware, including the Fabric Interconnects and IOMs. They also discussed the new features in the UCS Manager 3.1 code base release. Some of the new features of UCSM and the hardware are listed below:

UCS Manager 3.1

- Single code base (covers UCS mini, M-Series and UCS traditional)

- HTML 5 GUI

- End-to-end 40GbE and 16Gb FC with 3rd Gen FIs

- M series cartridges with Intel Xeon E3 v4 Processors

- UCS mini support for Second Chassis

- New nVidia M6 and M60 GPUs

- New PCIe-based Storage Accelerators

Next Gen Fabric Interconnects:

FI6332:

- 32 x 40GbE QSFP+

- 2.56Tbps switching performance

- 1RU & 4 Fans

FI6332-16UP:

- 24x40GbE QSFP+ & 16xUP Ports (1/10GbE or 4/8/16Gb FC)

- 2.43Tbps switching performance

IOM 2304:

- 8 x 40GbE server links & 4 x 40GbE QSFP+ uplinks

- 960Gbps switching performance

- Modular IOM for UCS 5108
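As a quick sanity check, the switching figures quoted above line up with the port counts if you add up the port speeds and count both directions. This is only a back-of-the-envelope sketch: the full-duplex doubling, and counting the 16 unified ports at 16Gb FC, are my assumptions about how the numbers are derived rather than something stated in the session.

```python
# Back-of-the-envelope check of the quoted switching performance figures.
# Assumption (mine, not from the session): each figure is the sum of all
# port speeds, doubled to count both directions (full duplex).

def switching_gbps(port_groups):
    """port_groups is a list of (port_count, gbps_per_port) tuples."""
    one_way = sum(count * speed for count, speed in port_groups)
    return 2 * one_way

devices = {
    "FI6332": [(32, 40)],
    # Assumption: the 16 unified ports are counted at their top speed, 16Gb FC.
    "FI6332-16UP": [(24, 40), (16, 16)],
    "IOM 2304": [(8, 40), (4, 40)],
}

for name, ports in devices.items():
    print(f"{name}: {switching_gbps(ports)} Gbps")
```

Under those assumptions the totals come out at 2560, 2432 and 960 Gbps, which matches the 2.56Tbps, 2.43Tbps and 960Gbps listed above.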

Two other notes from this section of the technical session were that the FI6300s require UCS Manager 3.1(1) and that the M-Series is not supported on the FI6300s yet. There was also an overview of the UCS Mini upgrades, the Cloud Scale and Composable Infrastructure (Cisco C3260) and the M-Series. I’ve not had any experience or knowledge of the M-Series modular systems before and I need to do far more reading to understand them better.

The second part of the session covered MAC pinning and the differences between the IOMs and Mezz cards. (For those that don’t know, the IOMs are pass-through and the Mezz are PCIe cards.) One aspect they covered which I hadn’t heard about before was UDLD (Uni-Directional Link Detection), which monitors the physical connectivity of cables. UDLD is point-to-point and uses echoing from the FIs out to neighbouring switches to check availability. It’s complementary to Spanning Tree and is also faster at link detection. UDLD can be set in two modes, default and aggressive. In default mode UDLD will notify and let Spanning Tree manage pulling the link down, and in aggressive mode UDLD itself will bring down the link.
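To make the difference between the two UDLD modes concrete, here is a toy model of the behaviour as it was described in the session. It is not the real protocol state machine, and the names (`UdldMode`, `handle_link`, the action strings) are made up for illustration.

```python
from enum import Enum

class UdldMode(Enum):
    DEFAULT = "default"
    AGGRESSIVE = "aggressive"

def handle_link(mode: UdldMode, echo_returned: bool) -> str:
    """Toy model: decide what happens to a link after a UDLD echo check.

    echo_returned is True when the neighbour echoed our probe back,
    meaning traffic is flowing in both directions.
    """
    if echo_returned:
        return "link-ok"
    if mode is UdldMode.AGGRESSIVE:
        # Aggressive mode: UDLD itself takes the link down.
        return "udld-brings-link-down"
    # Default mode: raise a notification and leave the decision
    # to Spanning Tree.
    return "notify-and-defer-to-spanning-tree"

print(handle_link(UdldMode.DEFAULT, echo_returned=False))
print(handle_link(UdldMode.AGGRESSIVE, echo_returned=False))
```

The only point of the sketch is that both modes detect the same failure, and the mode just decides who acts on it.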

The Storage Best Practices section looked at the two modes that FIs can be configured in and the capabilities of each. If you’re familiar with UCS then there’s a fair chance you’ll know this already. The focus was on FC protocol access via the FIs and how the switching mode changes how the FIs handle traffic.

FC End-Host Mode (NPV mode):

- Switch sees FI as server with loads of HBAs attached

- Connects FI to northbound NPIV enabled FC switch (Cisco/Brocade)

- FCIDs distributed from northbound switch

- DomainIDs, FC switching, FC zoning responsibilities are on northbound switch

FC Switching Mode:

- Connects to northbound FC switch as a normal FC switch (Cisco only)

- DomainIDs, FC Switching, FCNS handled locally

- UCS Direct connect storage enabled

- UCS local zoning feature possible

The session also touched on how the storage-heavy C3260 can be connected to the FIs via an appliance port. It’s also possible via UCSM to create LUN policies for external/local storage access. This can be used to carve up the storage pool of the C3260 into usable storage. One thing I didn’t know was that a LUN needs to have an ID of 0 or 1 in order for boot from SAN to work. It just won’t work otherwise. Top tip right there. During the storage section there was some talk about Cisco’s new HyperFlex platform but most of the details were being withheld until the breakout session on Hyper-Converged Infrastructure later in the week.

The UCS Operational Best Practices session primarily covered how UCS objects are structured and how they play a part in pools and policies. For those already familiar with UCS there was nothing new to understand here. However, one small tidbit I walked away with was around pool exhaustion and how UCS recursively looks up through the parent organisations until it reaches root, and even up to the global level if UCS Central is deployed or linked. One other note I took about sub-organisations was that they can go to a maximum of 5 levels deep. Most of the valuable information from this session was around the enhancements in the latest version of UCSM. These were broken down into improvements in firmware upgrade procedures, maintenance policies and monitoring. Most of these enhancements are listed here:

Firmware upgrade improvements:

- Baseline policy for upgrade checks – it checks everything is OK after upgrade

- Fabric evacuation – can be used to test fabric fail-over

- Server firmware auto-sync

- Fault suppression (great for upgrades)

- Fabric High Availability checks

- Automatic UCSM Backup during AutoInstall

Maintenance:

- On Next boot policy added

- Per Fabric Chassis acknowledge

- Reset IOM to Fabric default

- UCSM adapter redundant groups

- Smart call home enhancements

Monitoring:

- UCS Health Monitoring

- I2C statistics and improvements

- UCSM policy to monitor – FI/IOM

- Locator LED for disks

- DIMM blacklisting and error reporting (this is a great feature and will help immensely with troubleshooting)

Fabric evacuation can be used to test fabric fail-over before a firmware upgrade, to ensure that NIC bonding works correctly and that ESXi hosts fail over correctly to the second vNIC. There’s also a new health tab beside the FSM tab in UCSM.

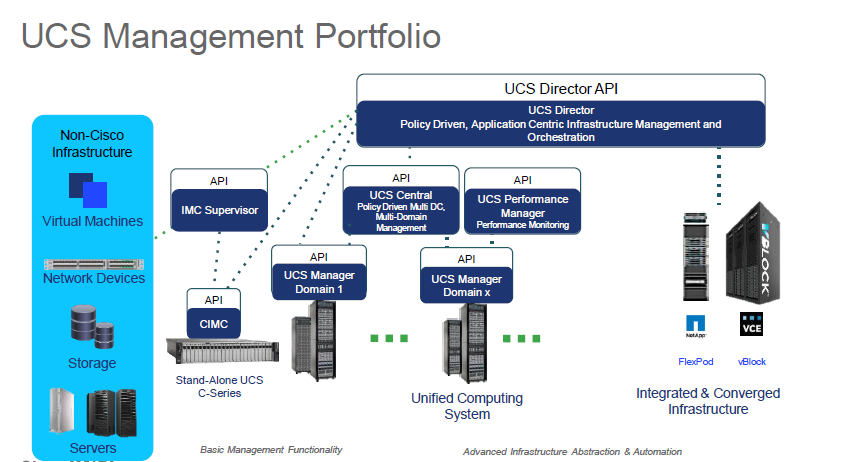
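Going back to the pool exhaustion tidbit from the operational best practices section, the recursive lookup can be pictured roughly like this. It’s a minimal sketch of the idea as I understood it, not how UCSM actually implements it; `Org`, `free_ids` and `allocate` are hypothetical names, and the global level in UCS Central could be modelled as one more parent above root.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class Org:
    """Hypothetical stand-in for a UCS organisation with one ID pool."""
    name: str
    parent: Optional["Org"] = None
    free_ids: list = field(default_factory=list)

def allocate(org: Org):
    """Walk from the given org up towards root and take the first free ID.

    Returns (org_name, identifier), or None if every pool on the way
    up is exhausted.
    """
    current = org
    while current is not None:
        if current.free_ids:
            return current.name, current.free_ids.pop(0)
        current = current.parent
    return None

# Example: the sub-organisation's pool is empty, so the ID comes from root.
root = Org("root", free_ids=["00:25:B5:00:00:01"])
finance = Org("finance", parent=root)
print(allocate(finance))  # served from root's pool
print(allocate(finance))  # None, everything is exhausted
```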

The last two sections of the session I have to admit were not really for me. I don’t know whether it was just because it was late in the day, my mind was elsewhere or that I was just generally tired but I couldn’t focus. The sections on Security within UCSM and UCS Performance Manager may well have been interesting on another day but they just didn’t do anything for me. The information was somewhat basic and I really felt that UCS Performance Manager was really more of a technical sales pitch. I feel the session would have been better served with looking at more high-level over-arching tools for management such as UCS Director rather than a monitoring tool which the vast majority of people are not going to use anyway.

Overall though this entire technical session was a great learning experience. The presenters were very approachable and I took the opportunity to quiz Chris Dunk in particular about the HyperFlex solution. While I may not attend another UCS technical session in the future, I would definitely consider stumping up the extra cash needed for other technical sessions which may be more relevant to me then. There are a lot of options available.

After the sessions were completed I headed down to the World of Solutions opening and wandered around for a bit. As I entered I was offered an array of free drinks. Under other circumstances I would have jumped at the chance but I’m currently on a 1-year alcohol sabbatical so I instead floated around the food stand that had the fresh oysters. The World of Solutions was pumping. I didn’t really get into any deep conversations but I did take note of which vendors were present and who I wanted to interrogate more later in the week. I left well before the end of the reception so I could get home early. The next day was planned to be a big day anyway.

On further investigation I found that this is a known bug when upgrading to versions 2.2(2) and above. I was upgrading from version 2.2(1d) to 2.2(3d). Despite being a critical alert the issue does not impact any services. The new UCSM software is looking for new features on the FI that do not exist yet as it has not been upgraded. As soon as you upgrade the FIs this critical alert will go. More information about the bug can be found Cisco’s support page for the bug