Cloud Computing, or ‘The Cloud’, has become ubiquitous over the past couple of years. A term that was coined and took off within the IT community has taken hold among the general populace, and even your granny will be on about storing information in the cloud. But which cloud is the right one? There are so many different definitions of ‘The Cloud’ that I’d be writing for the next week just to go through them alone. For me, the keys to cloud are scalability, shared resources, automation, software- or profile-driven management and a self-service function.

For the majority of end-users, ‘The Cloud’ is where you store extra backups of the photos or documents on your laptop or smartphone, which allows you both to recover those items if needed and to access them quickly and easily on another device. Creating a seamless user experience between devices is key for these types of solutions. However, for IT professionals it’s not only about these two features.

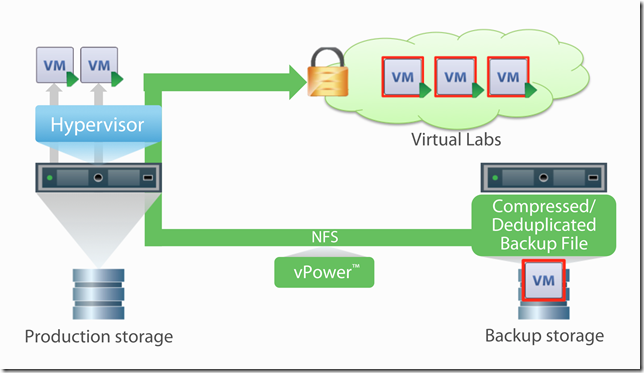

Cloud technology gives organisations the opportunity to expand their infrastructure and platforms quickly and dynamically while moving the cost model from CAPEX to OPEX, a shift that management are happy to see. The use-cases for cloud technology are numerous but generally centre around backup, disaster recovery, test and development, scalable applications and, more recently, virtual desktop infrastructure (VDI). The options for cloud have been defined as Public, Private and Hybrid.

Public Cloud has hit the market hard during the past 3 to 4 years and has the backing of IT heavyweights like Microsoft (Azure), IBM (SoftLayer), Google (Google Cloud Platform) and a relative newcomer to enterprise IT but an absolute beast, Amazon (Amazon Web Services). For web-based applications or even start-up companies, public cloud is a great way to go, as it’s easy to scale and the infrastructure was designed more specifically for modular applications. AWS, the shining example of growth, counts Netflix among its success stories. Netflix grew rapidly, and if it had been running on a traditional platform its growth would definitely have been limited. Thanks to the public cloud, however, it was possible to satisfy the demand for Netflix, and Netflix is now one of the largest sources of evening internet traffic in the United States, with its services running on AWS and managed by a minimal support staff.

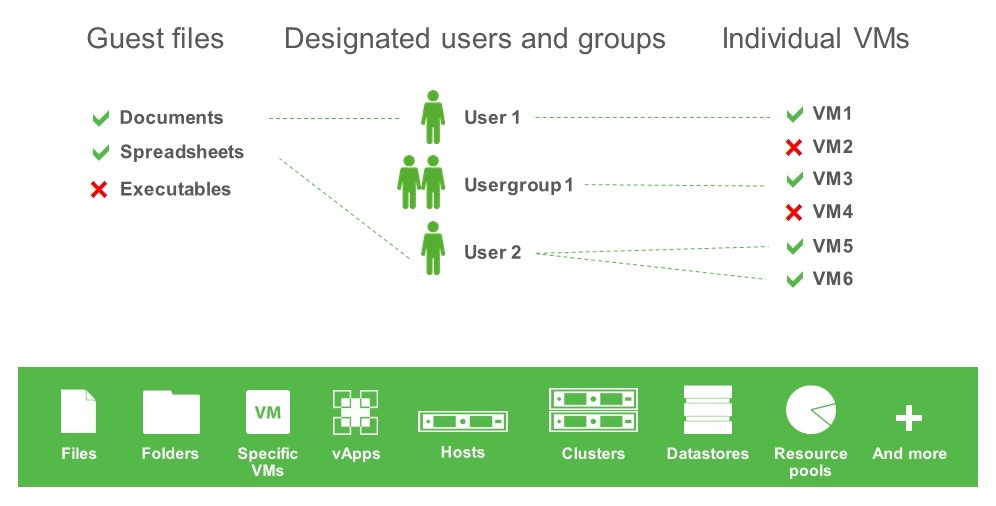

The majority of companies will have their own Private Cloud, or will at least be moving in that direction by having a fully virtualised platform. However, virtualisation is not the same as Cloud. A lot of people make that mistake. Virtualisation provides the mechanisms that allow cloud technology to exist: it pools physical resources into a shared virtual resource pool, allowing greater utilisation of compute, storage and network resources. Where most Private Clouds fall down is in the area of automation and self-service provisioning. There are a large number of infrastructure providers that fit into the Private Cloud space. Cisco and NetApp teamed up to create FlexPod, which has been extremely popular and has helped Cisco become the leading blade infrastructure provider globally in just 5 years. Cisco UCS’s policy-driven platform has helped organisations quickly and easily scale their infrastructure using templates. This kind of platform has come to be labelled ‘legacy infrastructure’, a term I don’t necessarily agree with, because to me it’s just a nice marketing term.

In just the past 2 years there’s been a huge shift in the storage market that has changed how storage is delivered. Hyper-converged infrastructure has been growing rapidly, with Nutanix leading the charge. Other similar systems, such as the recently announced VMware EVO:RAIL and SimpliVity, which teamed up with Cisco earlier this year, are creating waves in how infrastructure is delivered. They all bring storage closer to the compute layer and modularise RAM, CPU, networking and storage into one unit, so that growth is easily scalable from a low-cost entry point. The hyper-converged platforms are definitely going to change how Private Cloud is deployed and managed in the coming years. And the upside to these new players on the market is that some of the older, larger players in the IT market have had a virtual kick in the arse. So expect lots more innovation in the future.

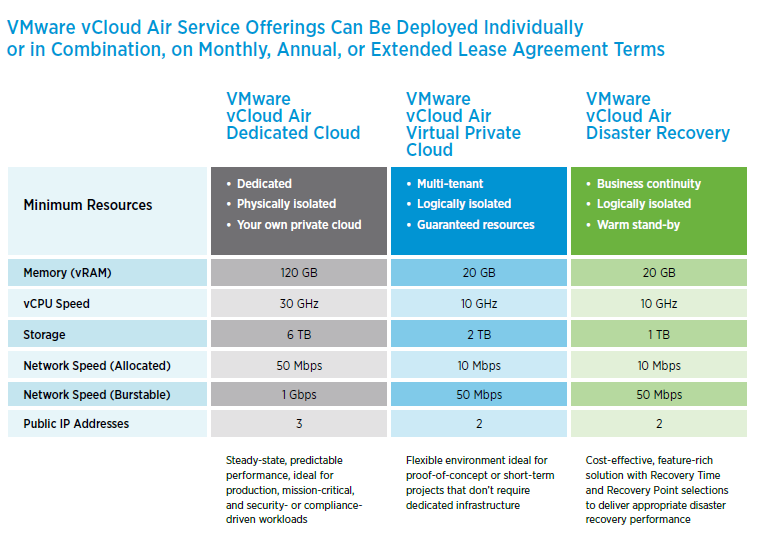

Hybrid cloud has been on a similar trajectory to hyper-converged platforms over the past 2 years or so, on the up and up in terms of popularity. There are many reasons for organisations to utilise the capacity, scalability and resources of public cloud platforms, but security concerns, internal politics, infrastructure complexities or application restrictions usually mean that it’s not possible to move the entire production infrastructure. Hybrid cloud, however, gives you the opportunity to open up your environment and leverage some public resources in a private capacity, under the control and security of just one IT team. I’m an engineer at a company that suffers from tombstone applications (applications that have been left in the environment with no owner and no responsible person but that cannot be moved or upgraded) and has valid security concerns around sensitive data, so for us the only option regarding cloud technology is Hybrid. I’m currently looking into our options here, and some very recent announcements have really piqued my interest. I plan to go into more detail on some of these over the coming weeks, but a few worth a look are VMware’s newly announced vCloud Air and NetApp’s Cloud ONTAP and NetApp Private Storage.

So if you’ve read this far, you have every right to ask whether I’ve told you which Cloud you should choose. I haven’t. I can’t tell you that. There are just far too many options. Nowadays IT, and Cloud in particular, is the equivalent of the menu at the Cheesecake Factory: absolutely immense, and when the waiter asks what you want you blindly point at it, hoping you get something you wouldn’t mind eating, because you couldn’t get past the second page. Cloud is just the same. So many options, not enough time. You really have to analyse your environment, your requirements and your desired roadmap so that you can match them to the type of cloud you need.