Edit: I have since completed a successful MetroCluster failover test, and it is documented in full in the following blog posts:

- NetApp 7-Mode MetroCluster Disaster Recovery – Part 1

- NetApp 7-Mode MetroCluster Disaster Recovery – Part 2

- NetApp 7-Mode MetroCluster Disaster Recovery – Part 3

Just before the Xmas break I had to perform a MetroCluster DR test. I don’t know why all DR tests need to be done just before a holiday period, but it always seems to happen that way. Actually, I do know, but it doesn’t make the run-up to the holidays any more comfortable for an IT engineer. Before the DR test I had a fairly good knowledge of how MetroCluster worked; afterwards that knowledge had improved vastly. If you want to learn how MetroCluster works and how it can be fixed, I’d recommend breaking your environment and working with NetApp support to fix it again. Set aside plenty of time to get everything working again and your learning experience will be complete (though you may have trouble convincing your boss to let you break your environment).

I hadn’t worked with MetroCluster before, so while I understood how it worked and what it could do, I didn’t really understand the ins and outs or how it differs from a normal 7-Mode HA cluster. The short version is that it’s not all that different, but it is far more complex when it comes to takeovers and givebacks, and a bit more sensitive too. During my DR test I went from a test to an actual DR and infrastructure fix. Despite the problems we faced, data management and availability were rock solid and we suffered absolutely no data loss.

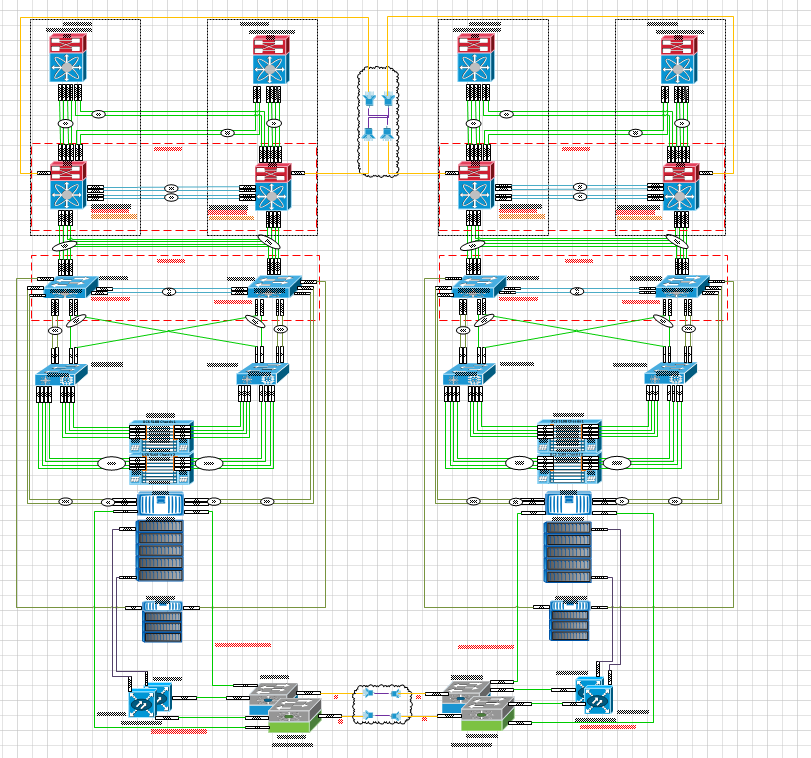

I won’t go deeply into what MetroCluster is and how it works here (I may cover that in a separate blog post), but the key thing to be aware of is that each aggregate is mirrored as two plexes, and SyncMirror ensures that every write to the primary plex is synchronously written to the secondary plex, so all data exists in two places. SyncMirror differs from SnapMirror in that it synchronises whole aggregates, whereas SnapMirror works at the volume level. MetroCluster itself is classed as a disaster avoidance system, and it achieves this by keeping synchronised copies of the data on different sites. The MetroCluster in our environment is part of a larger FlexPod environment that includes fully redundant Cisco Nexus switches, Cisco UCS chassis and blades, and a back-end dark fibre network between sites. A 10,000-foot view of the environment looks something like the diagram below, and I think you’ll agree there are a lot of moving parts here.

So what exactly happened during the DR test, and why did I lose a storage controller? I can put my hand up for my part in the failure; the other part comes down to NetApp documentation that isn’t clear about which operations need to be performed and in what sequence. As I’ll discuss later, NetApp really needs to update its documentation on MetroCluster testing. The main purpose of this post is not to criticise MetroCluster but to highlight the mistakes I made, how they contributed to the failure, and what I would do differently in future. It’s more of a warning to others not to make the same mistakes. Truth be told, if the failure had occurred on something that wasn’t a MetroCluster I could have been in real trouble and, worst of all, lost production data. Thankfully MetroCluster came to my rescue on this front.

What were the DR requirements?

The requirements for the DR test were to simulate a full site failure and fail over all VMs and storage from the secondary site to the primary site. This failover had to meet the RTO and RPO objectives set by our Quality Assurance team. Once all systems were running successfully on one site, a failback was to be performed, subject to the same RTO and RPO objectives. That covered one half of the testing; the other half was to fail over the primary site to the secondary and back again. Note that I say primary and secondary only to differentiate between the sites: with MetroCluster both sites are active. During testing I never got to the second half and had to stop all further testing until I had every system back online. This was also the first failure test of the infrastructure since its implementation, when the testing dealt more with component failure and failover with no real data. The full infrastructure has been in production for 9 months, 3 of which I’ve been involved in.

Where did it go wrong?

NetApp recommends only one technical document for MetroCluster failover testing, and that is what we used to create our test plan and test cases for the DR test: “TR-3788: A Continuous-Availability Solution for VMware vSphere and NetApp: Using VMware High Availability and Fault Tolerance and NetApp MetroCluster”. As part of our test we followed section 5.2, “Failures that affect an Entire Data Center”. The steps for simulating a failure according to the document are:

I decided not to power down the disk shelves, as the ISL would already be broken and the storage controller was going to be powered off. This was a mistake on my part (mistake number 1), as I believe it caused some physical disk failures in two separate shelves on that site. Unfortunately I was unaware of these failures because AutoSupport was turned off during the DR test. Even now we don’t know whether the disks had failed before the test began or failed as part of the shutdown and takeover process. While I can’t show the exact test cases, I can go through the steps taken to perform the failover and the giveback.

Before I began the failover I migrated all VMs to hosts on the primary site and logged onto UCS Manager to shut down the blades in the chassis on the secondary site. As part of this I also migrated the vCenter server to a datastore on the primary site. I wanted to keep access to vCenter throughout the test, as trying to recover it on top of any other failures would just be a pain in the ass. Once vCenter was migrated I began the failover in earnest.

On the Cisco MDS fibre switch I shut down the ISL connection from the console. I logged on directly to the switch as an admin and ran the following commands:

```
show int brief
config t
interface fc1/41
shutdown
```

Next I logged onto the secondary storage controller and ran aggr status -r to check the current RAID status of the aggregates. I then logged onto the secondary storage controller’s Service Processor via SSH and ran the halt command to shut it down. The primary storage controller detected that the secondary controller was no longer contactable, and at that point I ran cf forcetakeover -d. 17 minutes later the primary site was running the whole environment. There were errors about not being able to access the mailbox disk, but even now I don’t know whether that was due to an issue accessing the secondary site (an expected response) or the primary site (really bad news if that’s the case).

At this point I ran system health checks and got the application teams to test some of the critical applications. All was going well, but I knew the critical point was always going to be the giveback. So once the team gave the green light I began the giveback process, which should have been a straightforward giveback command and the first step in bringing the environment back online bit by bit. According to the TR document, the advised steps were:

As we didn’t have the shelves powered off, I could skip step one. I logged onto the MDS switch and re-enabled the switch port using the following commands:

```
show int brief
config t
interface fc1/41
no shutdown
```
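Because the failover and the giveback toggle the same ISL port, it can help to generate both command sequences from one place so they can never target different ports. This is a minimal Python sketch, assuming you only want to build the MDS command lines shown above; the `isl_port_commands` helper is hypothetical, and actually pushing the commands to the switch (console, SSH, and so on) is deliberately left out.

```python
from __future__ import annotations


def isl_port_commands(interface: str, enable: bool) -> list[str]:
    """Build the MDS CLI sequence used above to disable or re-enable one ISL port.

    `interface` is the FC port name, for example "fc1/41" in this environment.
    """
    if not interface.startswith("fc"):
        raise ValueError(f"expected an FC interface such as fc1/41, got {interface!r}")
    return [
        "show int brief",          # check the current port states first
        "config t",                # enter configuration mode
        f"interface {interface}",  # select the ISL port
        "no shutdown" if enable else "shutdown",  # re-enable or disable it
    ]


# Failover: take the ISL down.
print("\n".join(isl_port_commands("fc1/41", enable=False)))

# Giveback: bring the same ISL back up.
print("\n".join(isl_port_commands("fc1/41", enable=True)))
```

Keeping the port name as a single parameter is the whole point of the design: the disable and re-enable steps are guaranteed to act on the same interface.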

Next I logged onto the Service Processor of the secondary controller and ran boot_ontap to bring the controller back online. I was then able to log onto the storage controller, and it came up with the message ‘Waiting for Giveback’. At this point everything looked OK and as expected. As we use boot from SAN for our ESX hosts, we couldn’t boot them back up as per step 3 until the giveback had finished. I ran cf status and saw that the secondary controller was waiting for giveback, so I ran cf giveback. This failed, with a message saying only that the giveback could not be performed.

I weighed up what might have caused this, and it is here that I made mistake number 2: I ran cf giveback -f. The primary controller left takeover mode and the secondary controller went into a continuous boot cycle. This is where the tears and fears mentioned in the title came in. It was one of those moments where you know you’ve just gone past the point of no return and feel like kicking yourself, and it made a bad situation worse. I had previously used cf giveback -f on another client’s 7-Mode system to complete a giveback, but as I mentioned earlier, MetroCluster is more complex and sensitive and, for want of a better phrase, the storage controller shat itself. After the initial cf giveback failure I should have checked for the following, as per https://library.netapp.com/ecmdocs/ECMP1210206/html/GUID-E37EC334-C009-4A6A-82A8-BE383D6D5567.html:

Check whether any of the following processes were taking place on the takeover node at the same time you attempted the giveback:

- Advanced mode repair operations, such as wafliron

- Aggregate creation

- AutoSupport collection

- Backup dump and restore operations

- Disks being added to a volume (vol add)

- Disk ownership assignment

- Disk sanitization operations

- Outstanding CIFS sessions

- Quota initialization

- RAID disk additions

- Snapshot copy creation, deletion, or renaming

- SnapMirror transfers (if the partner is a SnapMirror destination)

- SnapVault restorations

- Storage system panics

- Volume creation (traditional volume or FlexVol volume)
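Before retrying a failed cf giveback (and certainly before reaching for cf giveback -f), the checklist above can be turned into a simple pre-check. This is a hedged Python sketch: the short operation labels are hypothetical names I’ve made up for the items in NetApp’s list, and you would still have to work out yourself which operations are in progress on the takeover node; nothing here talks to a controller.

```python
from __future__ import annotations

# Hypothetical labels for the operations NetApp lists as able to block a giveback.
GIVEBACK_BLOCKERS = {
    "wafliron": "Advanced mode repair operations, such as wafliron",
    "aggr_create": "Aggregate creation",
    "autosupport": "AutoSupport collection",
    "dump_restore": "Backup dump and restore operations",
    "vol_add": "Disks being added to a volume (vol add)",
    "disk_assign": "Disk ownership assignment",
    "disk_sanitize": "Disk sanitization operations",
    "cifs_sessions": "Outstanding CIFS sessions",
    "quota_init": "Quota initialization",
    "raid_disk_add": "RAID disk additions",
    "snapshot_ops": "Snapshot copy creation, deletion, or renaming",
    "snapmirror_xfer": "SnapMirror transfers (if the partner is a SnapMirror destination)",
    "snapvault_restore": "SnapVault restorations",
    "panic": "Storage system panics",
    "vol_create": "Volume creation (traditional volume or FlexVol volume)",
}


def giveback_blockers(in_progress: set[str]) -> list[str]:
    """Return descriptions of any in-progress operations that can block a giveback."""
    unknown = in_progress - set(GIVEBACK_BLOCKERS)
    if unknown:
        raise KeyError(f"unrecognised operation labels: {sorted(unknown)}")
    return [GIVEBACK_BLOCKERS[op] for op in sorted(in_progress)]


blockers = giveback_blockers({"snapshot_ops"})
if blockers:
    print("Do not force the giveback; wait for or stop:")
    for blocker in blockers:
        print(" -", blocker)
else:
    print("No known blockers; retry cf giveback (without -f)")
```

Rejecting unknown labels is intentional: a typo in the list of running operations should stop you, not silently report "no blockers".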

The same page also says that when cf giveback fails you should look for the cf.giveback.disk.check.fail message on the console. That check is meant to confirm that no disks have failed and that both nodes can see the same disks, but the message never appeared. The only explanations I can think of are that a Snapshot copy completed at the same moment I ran the giveback command, or that the secondary controller panicked at giveback and dropped the storage.
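If you capture the console output during a giveback (for example by logging the Service Processor session to a file), a quick scan for the message NetApp mentions is more reliable than eyeballing screens of output. A minimal Python sketch, assuming the console log has been saved as plain text; only the message name comes from NetApp’s page, and the sample lines are placeholders rather than real ONTAP output.

```python
from __future__ import annotations

GIVEBACK_DISK_CHECK = "cf.giveback.disk.check.fail"


def find_giveback_disk_check_failures(console_log: str) -> list[tuple[int, str]]:
    """Return (line number, line) pairs from a saved console log that mention the message."""
    return [
        (number, line.strip())
        for number, line in enumerate(console_log.splitlines(), start=1)
        if GIVEBACK_DISK_CHECK in line
    ]


# Placeholder text standing in for a captured console session; not real ONTAP output.
sample_log = "\n".join([
    "placeholder console line 1",
    "placeholder line mentioning cf.giveback.disk.check.fail",
    "placeholder console line 3",
])

hits = find_giveback_disk_check_failures(sample_log)
if hits:
    for number, line in hits:
        print(f"line {number}: {line}")
else:
    print("No cf.giveback.disk.check.fail message found")
```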

What issues were experienced?

At this point I opened a P1 support call with NetApp and began the process of getting my environment back up and running. We suffered a number of issues, but the main problem was that the primary controller was no longer in takeover mode, the secondary controller was completely offline and stuck in a boot cycle, and any VMs on storage served by the secondary controller (or at least storage that should have been served by it after the giveback) were frozen. One of the frozen VMs hosted our proxy PAC file, so we lost internet access and could not connect with NetApp support via WebEx. I was flying blind, sending logs and screen captures to the support team and following their steps over the phone.

Normally the concern with a takeover or a giveback is that you can suffer from split brain. This didn’t happen in our case; we only ever had one set of data active. While troubleshooting with NetApp we found that two disks had failed, one in each of two different disk shelves on the secondary site, and that the MDS switch was reporting all connections to the storage controller as down because the controller was caught in a boot cycle. Initially we couldn’t work out why it was in a boot cycle, but eventually we found that the controller could see two root volumes sharing the same FSID, and both had been taken offline because it could not tell which one was active.

This is where the difference between MetroCluster and normal 7-Mode comes into play. Because the plexes are mirrored, the takeover basically breaks the SyncMirror and promotes the synchronised plexes and data to allow writes. At that point all is well, as there are no FSID clashes. However, when the giveback occurred there ended up being two root volumes with the same FSID, and the controller just thought bugger this, I’m out of here. The fix was to go into maintenance mode and delete the second root volume, named root_volume(1). We also had to do the same for a data volume that had (1) appended to its name, just to clear things up. On the next reboot of the storage controller ONTAP loaded correctly. This immediately unlocked the frozen VMs, and the links on the MDS fibre switch showed as active.
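To make the FSID clash concrete, here is a hedged Python sketch that groups volumes by FSID and flags any ID claimed by more than one volume, which is the condition the controller tripped over after the giveback. The volume names (apart from root_volume(1), which comes from the post) and all FSID values are invented; in our case this information was gathered in maintenance mode with NetApp support, and nothing here reads it from a real controller.

```python
from __future__ import annotations

from collections import defaultdict


def duplicate_fsids(volumes: dict[str, int]) -> dict[int, list[str]]:
    """Map each FSID shared by two or more volumes to the sorted volume names using it."""
    by_fsid: dict[int, list[str]] = defaultdict(list)
    for name, fsid in volumes.items():
        by_fsid[fsid].append(name)
    return {fsid: sorted(names) for fsid, names in by_fsid.items() if len(names) > 1}


# Invented example: the "(1)" copies share an FSID with the originals.
volumes = {
    "root_volume": 0x1A2B,
    "root_volume(1)": 0x1A2B,
    "data_vol": 0x3C4D,      # hypothetical data volume name
    "data_vol(1)": 0x3C4D,
    "other_vol": 0x5E6F,     # hypothetical volume with a unique FSID
}
for fsid, names in duplicate_fsids(volumes).items():
    print(f"FSID {fsid:#x} is shared by: {', '.join(names)}")
```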

I should add that getting from the giveback failure to a successfully rebooted secondary storage controller took almost 8 hours of work with the support team. But we weren’t out of the woods yet. We let things settle for a bit and then tackled the next issue: the controller was reporting that it was in mixed-HA mode rather than HA mode. This was because one of the IO modules was not connecting properly, so the storage loop could not enable HA mode. We reseated the A module on a disk shelf, leaving 45 seconds between removing and replacing it, and the controller then came up in HA mode rather than mixed-HA mode.

The next problem came as a real surprise, as we were now seeing problems on the primary site as well: 10 disks had logically failed across the 3 RAID groups in Plex 2 (the data plex) on the primary site. After a lot of investigation we ran disk unfail <disknumber> on each of the disks, which brought them online and allowed them to rebuild. The next part of the process was to resynchronise with the plex on the opposite site. NetApp support advised that disks can logically fail if the MDS connection is down, which was exactly the case while the secondary controller would not boot and its connection on the MDS was inactive: the primary site panicked and logically failed the disks. The process to unfail, rebuild and resynchronise took almost 36 hours, but once it had completed all systems were back to normal. The UCS blades were brought back online and the VMs migrated back to their original locations through the DRS rules.
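With 10 disks to unfail, it is less error-prone to generate the commands from a list than to type each one. A hedged Python sketch that only builds lines in the disk unfail <disknumber> form used above; the disk names are placeholders, and it deliberately adds no flags or privilege-level changes (such as advanced mode) that your ONTAP version may require, so confirm those with NetApp support before running anything.

```python
from __future__ import annotations


def unfail_commands(failed_disks: list[str]) -> list[str]:
    """Build one `disk unfail` command per logically failed disk, skipping duplicates."""
    seen: set[str] = set()
    commands: list[str] = []
    for disk in failed_disks:
        if disk not in seen:  # never unfail the same disk twice
            seen.add(disk)
            commands.append(f"disk unfail {disk}")
    return commands


# Placeholder disk names; substitute the names of the logically failed disks.
for command in unfail_commands(["<disk_a>", "<disk_b>", "<disk_a>"]):
    print(command)
```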

This is where the joy comes in. After seeing the controller flatline for a number of hours and dealing with one issue after another, I felt like I’d be looking for a new job very soon. Yet when the controller came back online and all data was synchronised, we had still met the RTO and RPO objectives, even from a real disaster. And there was absolutely no data loss, which was the most impressive thing. The applications team checked all the affected apps and they all gave the thumbs up. I breathed a huge sigh of relief at this point.

What would you do in the future?

I have since received a root cause analysis report from NetApp, and it mentions two problems. One is that the disk shelves were not powered off. As I’ve already said, I take responsibility for that; powering them off may have stopped the disks from physically failing, although there’s no guarantee of that. The other is that the Hardware Assist ports on the Service Processor tried to initiate a takeover rather than waiting for Data ONTAP to recognise that the partner’s heartbeat had stopped, and that the Service Processors on both sites could still communicate with each other when the forced takeover process was started. To me this reads as: next time, turn off the disk shelves and sever all connections simultaneously. That is what NetApp recommends. They say all connections should be shut down simultaneously, essentially performing a real DR rather than a simulation or test: just perform the failover as if it were a real disaster. Given that we don’t get the opportunity to pull the power to the data center and deal with whatever that throws up, the only real option is to shut down the PDUs in the NetApp rack and pull the ISL and the out-of-band (OOB) Service Processor links at the same time. NetApp have also recommended opening a P1 with support when you’re about to run cf giveback and getting them to walk through it with you. I believe NetApp will recommend this going forward, as my MetroCluster DR test was not the only one to fail in the past few weeks in Australia alone.

The first thing we have to do is update the test plan, our work instructions for performing the test, and the test cases themselves. Also, when I reschedule the test we’ll ask NetApp to review the test cases in advance and to have an engineer on site while the tests are carried out. I also won’t be so rash in running cf giveback -f on a MetroCluster controller in future, and I’ll make sure the disk shelves are powered down.

As you may have noticed, I take responsibility for my part in the failure, but NetApp also need to step up to the plate and acknowledge that their documentation on MetroCluster-specific failovers is severely lacking. It’s extremely high level and does not set out any step-by-step process to follow. Even following the steps in the TR document precisely would have left us with issues, as it describes a sequential shutdown of components rather than the simultaneous shutdown that NetApp now recommends. For the benefit of everyone, some clear, concise documentation on this sort of procedure would be greatly appreciated, even if it just says that NetApp Support should be contacted before a MetroCluster failover.

Will you be replacing MetroCluster?

Hell no! It’s bombproof. I managed to drop a Derek-bomb on the thing and it survived to tell the tale. There was definitely a period where I questioned whether it would come back, and whether I had made the correct career decisions, but the simple fact is that despite losing a controller and some disks, and despite issues with IO modules and logical disk failures, there was no data loss and all applications came back online. The only real impact was on 3 VMs whose vNICs disconnected during the vMotion between sites, which has nothing to do with MetroCluster; it’s a VMware thing.

As I mentioned at the outset I have a far better understanding of MetroCluster now and also a great appreciation for what it can do. The plans for the next DR test are already in motion and I’ll document that process in full (hopefully a fully working process) once the test is completed. Maybe next time there’ll just be joy and no tears or fears. Although I think I’ll have a fear of cf giveback -f for the remainder of my life.
